MidwayR3 Specifications

General Design

MidwayR3 consists of login nodes and four shared compute nodes, with 441TB of total installed storage. The system uses Slurm as its workload manager to schedule jobs and allocate resources for computational tasks. Installed software packages are activated and managed through the environment module system, which lets users dynamically configure their environment to access the specific tools they need.

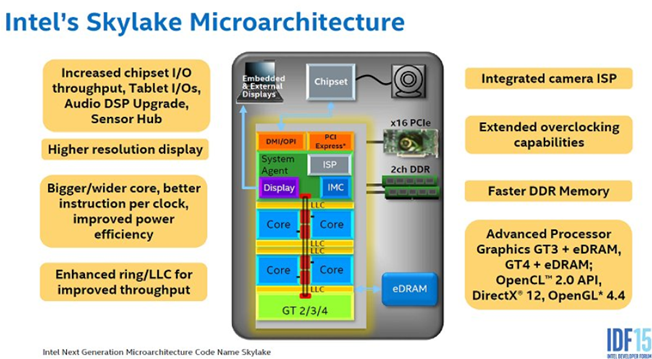

Compute Nodes: As of this writing, MidwayR3 hosts four compute nodes with the following specifications:

- CPUs: Two Intel Xeon Gold 6148 per node

- CPU code name: Skylake

- Processor Base Frequency: 2.4 GHz

- Maximum Turbo Frequency: 3.7 GHz

- Number of cores per processor: 20 cores

- Total number of cores per node: 40 cores

- Cache: 27.5 MB L3

- Memory: 96GB of TruDDR4 2666 MHz RDIMM memory

- ECC Memory supported
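
The per-node figures above imply some aggregate numbers that are useful when sizing jobs. The following is a minimal sketch of that arithmetic, assuming all four compute nodes are identical as listed; the names `NodeSpec`, `COMPUTE_NODE`, and `cluster_totals` are illustrative and not part of any MidwayR3 tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    """Hardware figures for one node, taken from the list above."""
    sockets: int
    cores_per_socket: int
    memory_gb: int

    @property
    def cores(self) -> int:
        # Total cores per node = processors per node x cores per processor.
        return self.sockets * self.cores_per_socket

    @property
    def memory_per_core_gb(self) -> float:
        # Installed memory divided evenly across cores (an upper bound,
        # since the operating system reserves part of it).
        return self.memory_gb / self.cores


# Two Xeon Gold 6148 processors, 20 cores each, 96GB of memory per node.
COMPUTE_NODE = NodeSpec(sockets=2, cores_per_socket=20, memory_gb=96)
NUM_COMPUTE_NODES = 4


def cluster_totals(node: NodeSpec, count: int) -> dict:
    """Aggregate cores and memory over `count` identical nodes."""
    return {"cores": node.cores * count, "memory_gb": node.memory_gb * count}


if __name__ == "__main__":
    totals = cluster_totals(COMPUTE_NODE, NUM_COMPUTE_NODES)
    print(f"Cores per node:   {COMPUTE_NODE.cores}")
    print(f"Memory per core:  {COMPUTE_NODE.memory_per_core_gb:.1f} GB")
    print(f"Total cores:      {totals['cores']}")
    print(f"Total memory:     {totals['memory_gb']} GB")
```

The memory actually available to jobs is likely somewhat lower than the installed 96GB per node, so the per-core figure should be read as a ceiling rather than a guaranteed allocation.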

Login Nodes: MidwayR3 login nodes are accessible only from the secure data enclave virtual desktop, using the hostname sde-login1.rcc.uchicago.edu. The login nodes have the following specifications:

- CPUs: Two Intel Xeon Gold 6130 per node

- CPU code name: Skylake

- Processor Base Frequency: 2.1 GHz

- Maximum Turbo Frequency: 3.7 GHz

- Number of cores per processor: 16 cores

- Total number of cores per node: 32 cores

- Cache: 22 MB L3

- Memory: 96GB of TruDDR4 2666 MHz RDIMM memory

- ECC Memory supported

Network:

- All compute nodes and storage are tightly connected through a Mellanox EDR InfiniBand network:

  - Up to 100Gb/s full bi-directional bandwidth per port

  - Ultra low latency

  - Adaptive routing

  - Port mirroring

  - Congestion control

  - Quality of service enforcement

- 10Gbps Intel Ethernet Adapter

Storage

The /home and /project file systems are shared across all nodes.

- Total storage for directories in /home is 21TB.

- Total storage for directories in /project is 420TB.

- Each PI is initially allocated 500GB of storage, with additional options available to departments contributing to the Cluster Partnership Program.

- Each user is granted a storage quota of 30GB for the home directory.

- There is no scratch filesystem.
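
Because the home directory quota is small and there is no scratch filesystem, it can help to check how much space a directory occupies before writing large outputs. The sketch below is one rough way to do that with the Python standard library. It sums apparent file sizes, which may differ from what the quota system actually counts, and it assumes the 30GB quota is measured in decimal gigabytes. The function names are illustrative; the cluster may offer its own quota reporting tool, which would be authoritative.

```python
import os

# Assumption: the 30GB home quota is counted in decimal gigabytes.
HOME_QUOTA_BYTES = 30 * 10**9


def directory_usage_bytes(root: str) -> int:
    """Sum the apparent sizes of all files under `root`.

    Symbolic links are not followed; unreadable entries are skipped.
    """
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                total += os.lstat(path).st_size
            except OSError:
                # The file vanished or is not readable; ignore it.
                continue
    return total


def quota_report(used_bytes: int, quota_bytes: int = HOME_QUOTA_BYTES) -> str:
    """Format usage as a short human-readable line."""
    fraction = used_bytes / quota_bytes
    return (
        f"{used_bytes / 10**9:.2f} GB of {quota_bytes / 10**9:.0f} GB "
        f"used ({fraction:.1%})"
    )


if __name__ == "__main__":
    used = directory_usage_bytes(os.path.expanduser("~"))
    print(quota_report(used))
```

Walking a large home directory can take a while on a shared file system, so this is better run occasionally than inside every job.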

Operating System

MidwayR3 runs a Linux operating system designed and configured for distributed computing on computationally intensive tasks.

MidwayR3 uses the Simple Linux Utility for Resource Management (SLURM) as its job scheduler. The Slurm Workload Manager is an open source job scheduler used by many of the world’s supercomputers and High-Performance Computing (HPC) clusters. On MidwayR3, you use it to submit, manage, monitor, and control your jobs.
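
As an illustration of how a job request relates to the hardware described earlier, the following sketch renders a Slurm batch script as text and rejects requests that exceed four nodes or 40 tasks per node. The `#SBATCH` options shown (`--job-name`, `--nodes`, `--ntasks-per-node`, `--time`) are standard Slurm directives. The function name, the job name, the command, and the module name are hypothetical. No partition or account is set because this document does not name them, and a real submission may require one.

```python
# Limits taken from the compute node specifications in this document.
CORES_PER_COMPUTE_NODE = 40
NUM_COMPUTE_NODES = 4


def render_batch_script(
    job_name: str,
    command: str,
    nodes: int = 1,
    ntasks_per_node: int = 1,
    time_limit: str = "01:00:00",
    modules: tuple = (),
) -> str:
    """Return the text of a Slurm batch script for the given request."""
    if not 1 <= nodes <= NUM_COMPUTE_NODES:
        raise ValueError(f"nodes must be between 1 and {NUM_COMPUTE_NODES}")
    if not 1 <= ntasks_per_node <= CORES_PER_COMPUTE_NODE:
        raise ValueError(
            f"ntasks_per_node must be between 1 and {CORES_PER_COMPUTE_NODE}"
        )

    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --nodes={nodes}",
        f"#SBATCH --ntasks-per-node={ntasks_per_node}",
        f"#SBATCH --time={time_limit}",
        "",
    ]
    # Module names are assumptions; check `module avail` for the real ones.
    lines += [f"module load {name}" for name in modules]
    lines.append(command)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical job: one node, all 40 cores, a placeholder command.
    script = render_batch_script(
        job_name="example",
        command="srun python analysis.py",
        ntasks_per_node=40,
        modules=("python",),
    )
    print(script)
```

The printed text could be saved to a file and submitted with `sbatch`. Generating the script from a function keeps the node and core limits in one place, but writing the same directives by hand works equally well.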

Software

MidwayR3 is equipped with various tools and software packages. The full list can be retrieved with the module avail command; a selection is listed below:

- Stata, R, Python, GCC, Go, AFNI, Ample, Apptainer, Gurobi, Git, Knitro, Julia, MKL, NetCDF, HDF5, PostgreSQL, RStudio, etc.